NEWS

- You can now find the slides from the tutorial presentations here.

- We uploaded a VM disk image with the software and datasets for the tutorial here.

Abstract

The increasing need for rapid characterization and quantification of

complex environments has created challenges for data analysis. This

critical need comes from many important areas, including industrial

automation, architecture, agriculture, and the construction or

maintenance of tunnels and mines. 3D CAD models are necessary for

factory design, facility management, and urban and regional planning. On

one hand, precise 3D data is nowadays reliably obtained by using

professional 3D laser scanners. On the other hand, efficient,

large-scale 3D point cloud processing is required to handle the

enormous amount of data. The tutorial will give insights into

state-of-the-art acquisition methods and software for addressing these

challenges.

Motivation and Objectives

Recently, 3D point cloud processing became popular in the robotics

community due to the appearance of the Microsoft Kinect camera. The

Kinect is a structured-light sensor that obtains a colored 3D

point cloud, also called an RGB-D image, with more than 300,000 points at a

frame rate of 30 Hz. The optimal range of the Kinect camera is 1.2 to

3.5 meters, which makes it well suited for indoor robotics in office or

kitchen-like environments. Besides the boost of 3D point cloud

processing through the Kinect, the field of professional 3D laser

scanning has advanced.

Light Detection and Ranging (LiDAR) is a technology for

three-dimensional measurement of object surfaces. Aerial LiDAR has

been used for over a decade to acquire highly reliable and accurate

measurements of the earth's surface. In the past few years,

terrestrial LiDAR systems were produced by a small number of

manufacturers. When paired with classical surveying, terrestrial LiDAR

delivers accurately referenced geo-data.

The objective of the tutorial is to present state-of-the-art 3D

scanning technology and recent developments for efficient processing

of large-scale 3D point clouds. Scenes scanned with LiDARs often

contain millions to billions of 3D points. The goal of the tutorial is

to give an overview of existing techniques and enable field

roboticists to use recent methods and implementations, such as

3DTK - The 3D Toolkit and the Las Vegas

Reconstruction Toolkit. We create reference material for the

participants for subtopics like 3D point cloud registration and SLAM,

calibration, filtering, segmentation, and large scale surface

reconstruction.

To achieve these objectives and to give participants hands-on experience with the

problems that occur when processing large-scale 3D point

clouds, the tutorial consists of presentations, software

demonstrations, and software trials. To this end, participants have to

bring their own Linux, MacOS, or Windows laptops.

Intended Audience

The tutorial consists of several interleaved theoretical and practical

parts. This makes the tutorial well-suited for motivated students at

all levels (Bachelor, Master, and PhD students) as well as all

roboticists who want to gain hands-on experience with the problems

that occur when processing 3D data. Experts, especially

roboticists with experience in the Point Cloud Library, are

particularly welcome as well.

List of Speakers

In alphabetical order:

- HamidReza Houshiar, Jacobs University Bremen gGmbH, Germany

- Andreas Nüchter, University of Würzburg, Germany

- Thomas Wiemann, Osnabrueck University, Germany

- TBA

Participation

To participate in the full-day tutorial, please register for the

tutorial at the ICAR 2013

conference.

List of Topics

Introduction and Overview of Laser Scanning Systems

The introduction gives a general review of range sensor systems,

including triangulation and LiDAR systems. The state of the art in

terrestrial large-volume data acquisition is to use high-resolution

LiDAR systems. These sensors emit a focused laser beam in a certain

direction and determine the distance to an object by measuring the

reflected light. By measuring the time difference between the emitted

and measured signal, the distance to an object surface can be

calculated. Laser range finders are distinguished by the method used

to determine the object's distance.

Pulsed wave systems emit a short laser flash and measure the time

until the reflected signal reaches the sensor. Because the speed of light

is constant, the distance follows directly from this time (time-of-flight method). Since

light travels about 300,000 km per second, a time

resolution of a few tens of picoseconds is necessary to reach an

accuracy of about 10 mm. Depending on the intensity of the laser

light, very high maximum ranges (up to 1000 m) can be

achieved. Besides pulsed laser scanners, systems using continuous

light waves exist. They determine the running time of the laser light

by measuring the phase shift between the emitted and detected

signal. Since the phase shift is only unambiguous within one full

cycle (0 to 360 degrees), their maximum range is limited, depending

on the modulation wavelength of the laser light. Typical values are about 80

m.
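The two ranging principles described above can be sketched in a few lines of Python. This is a minimal illustration, not code from any scanner vendor or from the tutorial software. All function names are hypothetical. The phase-shift part assumes the common formulation in which the phase of an amplitude-modulated signal is measured over one full cycle, and the 1.875 MHz modulation frequency is an assumed value chosen only so that the unambiguous range lands near 80 m.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def tof_distance(round_trip_time_s):
    """One-way distance for a pulsed scanner; the pulse travels out and back."""
    return C * round_trip_time_s / 2.0


def required_time_resolution(range_accuracy_m):
    """Timing resolution needed to resolve a given one-way range difference."""
    return 2.0 * range_accuracy_m / C


def phase_shift_distance(phase_rad, modulation_freq_hz):
    """One-way distance for a continuous-wave scanner from the measured phase."""
    return C * phase_rad / (4.0 * math.pi * modulation_freq_hz)


def unambiguous_range(modulation_freq_hz):
    """Largest one-way distance before the phase wraps around (phase = 2*pi)."""
    return C / (2.0 * modulation_freq_hz)


if __name__ == "__main__":
    # Timing resolution for 10 mm accuracy, printed in picoseconds.
    print(f"{required_time_resolution(0.010) * 1e12:.1f} ps")
    # Unambiguous range for the assumed 1.875 MHz modulation frequency.
    print(f"{unambiguous_range(1.875e6):.1f} m")
```

The factor of two in both formulas comes from the round trip: the light covers the distance to the surface twice before it is detected.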

Basic Data Structures and Point Cloud Filtering

In this part of the tutorial we cover the problem of

storing the data. Due to large environments, the large amount of data,

and the desired precision (millimeter scale), grid-based approaches

that are commonly used for planar indoor environments in robotics do

not work well. We present different types of range images, an octree

with a low memory footprint, and k-d trees. As the choice of data

structure depends on the algorithms, we identify the algorithmic

requirements and show which data structures support which tasks.
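As an illustration of how a data structure serves an algorithmic requirement, the following sketch builds a k-d tree over 3D points and answers nearest-neighbor queries, the operation that registration and normal estimation rely on most. It is a minimal pure-Python sketch under simple assumptions (points are 3-tuples, the tree is built once and not updated). The names `Node`, `build_kdtree`, and `nearest` are hypothetical and are not the 3DTK implementation.

```python
from collections import namedtuple

# One tree node: the splitting point, the split axis, and two subtrees.
Node = namedtuple("Node", ["point", "axis", "left", "right"])


def build_kdtree(points, depth=0):
    """Recursively split the points at the median, cycling through x, y, z."""
    if not points:
        return None
    axis = depth % 3
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2
    return Node(points[mid], axis,
                build_kdtree(points[:mid], depth + 1),
                build_kdtree(points[mid + 1:], depth + 1))


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(node, query, best=None):
    """Return the stored point closest to query, pruning subtrees that cannot win."""
    if node is None:
        return best
    if best is None or squared_distance(query, node.point) < squared_distance(query, best):
        best = node.point
    diff = query[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, query, best)
    # Visit the far side only if the splitting plane is closer than the best hit.
    if diff ** 2 < squared_distance(query, best):
        best = nearest(far, query, best)
    return best


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (1.0, 1.0, 1.0)]
    tree = build_kdtree(cloud)
    print(nearest(tree, (0.9, 0.1, 0.0)))  # prints (1.0, 0.0, 0.0)
```

The pruning test is what makes the structure pay off on large clouds: most queries touch only a small part of the tree instead of every point.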

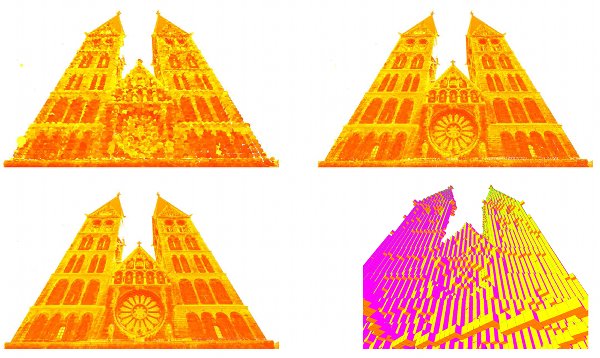

Three levels of detail for an efficient octree-based visualization. The

corresponding octree cubes are drawn in the bottom-right part.

Precise Registration and the SLAM Problem

After a precise 3D scanner has captured its environment, the data has to be

put into a common coordinate system. Registration aligns this

data. Computing precise registrations means solving the SLAM

problem. For general 3D point clouds, one has to work with six degrees

of freedom. Roughly the approaches can be categorized as follows:

Segmentation and Normal Estimation

Three core components of human perception are grouping, constancy, and

contrast effects. Segmentation in robot vision approaches this natural

way of observing the world by splitting the point clouds into components

with constant attributes and grouping them together. On range images,

a few existing image-based segmentation methods can be applied. Pure

point cloud segmentation methods rely only on geometry information. As

the local geometry of a point cloud is described by surface normals,

we present methods for computing these normals efficiently.
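One widely used way to estimate a surface normal is to fit a plane to the neighbors of a point: the normal is the eigenvector of the neighborhood covariance matrix that belongs to the smallest eigenvalue. The sketch below shows this idea in pure Python. It is an assumption about a typical approach, not necessarily the method presented in the tutorial. The function names are hypothetical, and the eigenvector is found by simple power iteration on a shifted matrix rather than by a library eigen-solver.

```python
import math


def covariance(points):
    """3x3 covariance matrix of a list of 3D points."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for p in points:
        d = [p[i] - mean[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j] / n
    return cov


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def power_iteration(m, start, iterations):
    """Approximate the dominant eigenvector of a symmetric matrix."""
    v = start
    for _ in range(iterations):
        w = mat_vec(m, v)
        norm = math.sqrt(dot(w, w))
        if norm == 0.0:
            return v  # degenerate case, e.g. all neighbors identical
        v = [x / norm for x in w]
    return v


def estimate_normal(neighbors, iterations=100):
    """Unit normal of the best-fit plane through the neighbors (sign is arbitrary)."""
    cov = covariance(neighbors)
    trace = cov[0][0] + cov[1][1] + cov[2][2]
    # The dominant eigenvector of (trace * I - cov) is the eigenvector of cov
    # with the smallest eigenvalue, which is the plane normal.
    shifted = [[(trace if i == j else 0.0) - cov[i][j] for j in range(3)]
               for i in range(3)]
    # Try all three axes as start vectors so that at least one of them is
    # not orthogonal to the normal, then keep the best candidate.
    starts = ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0])
    candidates = [power_iteration(shifted, s, iterations) for s in starts]
    return max(candidates, key=lambda v: dot(v, mat_vec(shifted, v)))


if __name__ == "__main__":
    # Points sampled from the plane z = 0; the normal is parallel to the z axis.
    patch = [(float(x), float(y), 0.0) for x in range(-2, 3) for y in range(-2, 3)]
    print(estimate_normal(patch))
```

In practice the neighbors would come from a k-nearest-neighbor or radius query on the point cloud, and the sign of each normal is usually flipped to point toward the scanner position.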

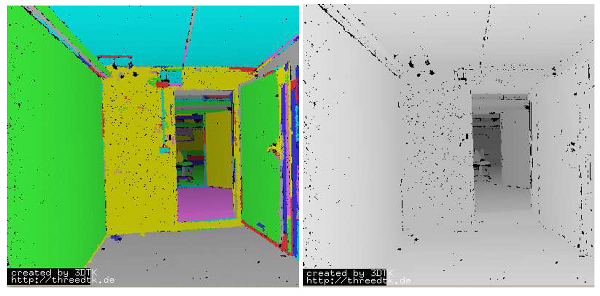

Normal-based segmentation of a 3D point cloud acquired in a room with an

open door.

Meshing and Polygonal Robot Map Generation

Three-dimensional environment representations play an important role

in modern robotic applications. They can be used as maps for

localization and obstacle avoidance as well as environment models in

robotic simulators or for visualization in HRI contexts, e.g., teleoperation

in rescue scenarios. For mapping

purposes, when building high-resolution maps of large environments,

the huge amount of data points poses a problem. A common approach to

overcome the drawbacks of raw point cloud data is to compute polygonal

surface representations of the scanned environments. Polygonal maps

are compact, thus memory efficient, and, being continuous surface

descriptions, offer a way to handle the discretization problem of

point cloud data.

In the context of mobile robotics, polygonal environment maps offer

great potential for applications ranging from use in

simulators and virtual environment modeling for teleoperation

to robot localization by generating virtual scans via ray

tracing. Integrating color information from camera

images or point cloud data directly adds an additional modality to the

representation and offers the possibility to fuse a compact geometric

representation with local color information. However, creating

polygonal environment maps from laser scan data manually is

a tedious job. We will therefore present methods to automatically compute

polygonal maps for robotic applications that are implemented in the

Las Vegas Surface Reconstruction Toolkit. We will

demonstrate several use cases for such maps, such as using them as

environment maps in Gazebo or for generation of synthetic point cloud

data for localization purposes using ray tracing.
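To make the idea of generating synthetic range data from a polygonal map concrete, the following sketch casts rays against a triangle mesh using the well-known Möller-Trumbore ray-triangle intersection test and keeps the closest hit per ray. It is a minimal brute-force sketch with hypothetical names (`ray_triangle`, `virtual_scan`) and is not the API of the Las Vegas Surface Reconstruction Toolkit. Ray directions are assumed to be unit vectors, so the returned values are metric ranges.

```python
EPS = 1e-9


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, triangle):
    """Moeller-Trumbore test: distance along the ray to the triangle, or None."""
    v0, v1, v2 = triangle
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < EPS:
        return None  # ray is parallel to the triangle plane
    inv = 1.0 / det
    t_vec = sub(origin, v0)
    u = dot(t_vec, p) * inv  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(t_vec, e1)
    v = dot(direction, q) * inv  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > EPS else None  # ignore hits behind the ray origin


def virtual_scan(origin, directions, mesh):
    """Closest hit distance per ray, or None where the ray misses the mesh."""
    ranges = []
    for d in directions:
        hits = [t for t in (ray_triangle(origin, d, tri) for tri in mesh)
                if t is not None]
        ranges.append(min(hits) if hits else None)
    return ranges


if __name__ == "__main__":
    # A 2 m x 2 m wall at x = 2, made of two triangles.
    wall = [((2.0, -1.0, -1.0), (2.0, 1.0, -1.0), (2.0, 1.0, 1.0)),
            ((2.0, -1.0, -1.0), (2.0, 1.0, 1.0), (2.0, -1.0, 1.0))]
    sensor = (0.0, 0.5, -0.5)
    print(virtual_scan(sensor, [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)], wall))
```

Testing every ray against every triangle is only practical for small meshes. For large maps, a spatial index such as a bounding volume hierarchy or an octree over the triangles would typically be placed in front of the intersection test.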

Example reconstruction using LVR. The input point cloud (left) is

automatically converted into a textured polygonal

representation. The number of elements in the stored data was

reduced from 12 million colored points to 82,000 textured triangles

using 126 bitmaps.

Semantic 3D Mapping

A recent trend in the robotics community

is semantic perception, mapping, and

exploration, which is driven by scanning scenes with RGB-D

sensors. A semantic map for a mobile robot is a map that

contains, in addition to spatial information about the environment,

assignments of mapped features to entities of known classes. Further

knowledge about these entities, independent of the map contents, is

available for reasoning in some knowledge base with an associated

reasoning engine. In this part of the tutorial we explore how

background knowledge gives a boost to model-based object recognition

in large-scale 3D laser data.

Semantically labeled point cloud of an office scene. The

detected furniture was recognized using plane detection and

background knowledge about the planar relations within the

corresponding CAD models.

Schedule

The tutorial will take place on Monday, the 25th of November. Each of

the parts will contain a lecture followed by software demonstrations

and programming sessions.

A detailed schedule will be released soon.

Supporting Materials

Here you can find the slides from the tutorial presentations:

3D Point Cloud Processing - Introduction

3D Point Cloud Processing - Basic Data Structures

3D Point Cloud Processing - Registration

3D Point Cloud Processing - Mobile Mapping

3D Point Cloud Processing - Features

3D Surface Reconstruction - Las Vegas Reconstruction

We have prepared a Virtual Machine Disk file containing all software

and data sets needed for the tutorial. You can obtain it as a single

file or split into 4 GB chunks for FAT file systems:

Full image (26 GB)

Split Image Part 1

Split Image Part 2

Split Image Part 3

Split Image Part 4

Split Image Part 5

Split Image Part 6

Split Image Part 7

Contact

Main Organizer

Andreas Nüchter

Informatics VII : Robotics and Telematics

Julius-Maximilians-University Würzburg

Am Hubland

D-97074 Würzburg

Germany

Co-Organizer

Thomas Wiemann

University of Osnabrück

Institute of Computer Science

Albrechtstraße 28

D-49069 Osnabrück

Germany
